With the introduction of iPhone XR, every phone in Apple’s lineup now supports depth capture. But the XR is unique: it’s the first iPhone to do it with a single lens. As we were starting to test and optimize Halide for it, we found both advantages and disadvantages.

In this post we’ll take a look at three different ways iPhones generate depth data, what makes the iPhone XR so special, and show off Halide’s new 1.11 update, which enables you to do things with the iPhone XR that the regular camera app won’t.

Depth Capture Method 1: Dual Camera Disparity

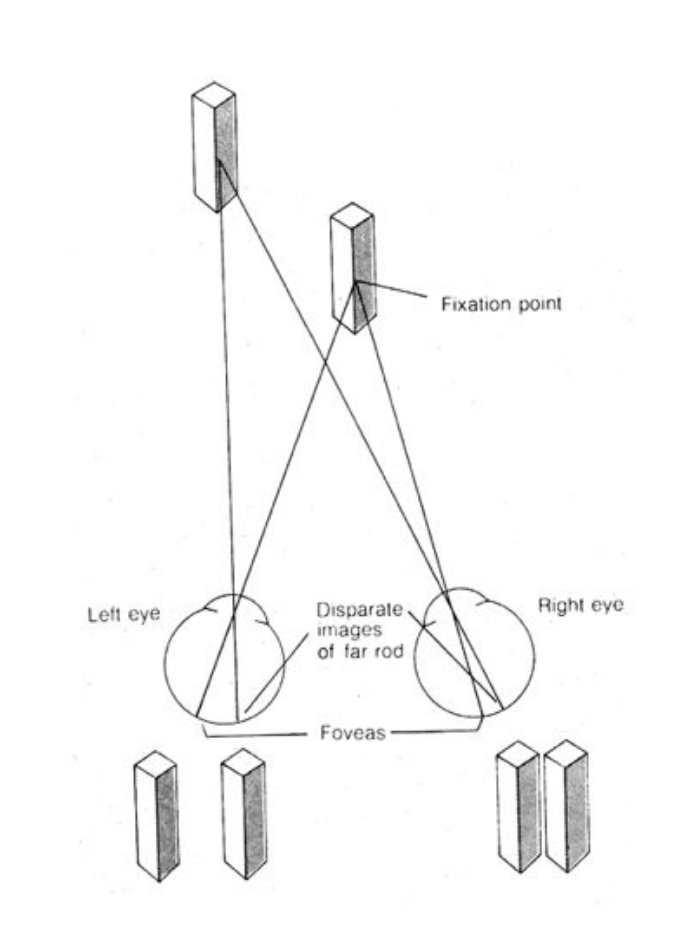

Humans perceive depth with the help of two eyes. Our eyes may be only a few inches apart, but our brains detect subtle differences between the two images. The greater the difference, or disparity, the closer an object.

The iPhone 7 Plus introduced a dual-camera system, which enables a similar way to construct depth. By taking two photos at the same time, each from a slightly different position, we can construct a disparity map.
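To make the idea concrete, here is a minimal sketch of disparity estimation on a single image row, using a plain sum-of-absolute-differences block match. It is a textbook illustration, not what iOS actually runs; the function name, the window size, and the search range are all assumptions made for the example.

```python
def scanline_disparity(left, right, window=1, max_disparity=4):
    """Estimate per-pixel disparity along one row with SAD block matching.

    Assumes a rectified pair: a point at x in `left` appears at x - d in `right`.
    """
    n = len(left)
    disparities = []
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disparity + 1):
            cost = 0.0
            for k in range(-window, window + 1):
                xl = min(max(x + k, 0), n - 1)      # clamp at the row borders
                xr = min(max(x + k - d, 0), n - 1)  # same patch, shifted by d
                cost += abs(left[xl] - right[xr])
            if cost < best_cost:                    # ties keep the smaller shift
                best_d, best_cost = d, cost
        disparities.append(best_d)
    return disparities


# A small textured feature that sits two pixels further left in the right view.
left_row = [0, 0, 0, 0, 0, 9, 5, 7, 0, 0, 0, 0]
right_row = [0, 0, 0, 9, 5, 7, 0, 0, 0, 0, 0, 0]
disparity_row = scanline_disparity(left_row, right_row)
```

On the textured feature the match is unambiguous. In the flat regions every shift costs the same, so the result there is effectively a guess.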

There’s a lot of guesswork involved when matching images. Add video noise and things get even rougher. A great deal of effort goes into filtering the data, with additional post-processing that guesses how to smooth edges and fill holes.

This requires a lot of computation. It wasn’t until the iPhone X that iOS could achieve 30 frames a second. It also takes a lot of memory: for a while, our top crash was running out of memory because the system was using most of it on depth.

Dual Camera Drawbacks

One limitation and quirk of this method is that you can only generate depth for the parts of the two images that overlap. In other words, if you have a wide-angle and a telephoto lens, you can only generate depth data for the narrower field of view that the telephoto lens sees.

Another limitation is that you can’t use manual controls. The system needs to perfectly synchronize frame delivery, and each camera’s exposure. Trying to manage these settings by hand would be like trying to drive two cars at once.

Finally, while the 2D color data may be twelve megapixels, the disparity map is about half a megapixel. If you tried to use it for portrait mode as-is, you’d end up with blurry edges that ruin the effect. We can sharpen edges by observing contrast in the 2D image, but that’s not good enough for fine details like human hair.
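One generic way to "sharpen edges by observing contrast in the 2D image" is an edge-aware upsample in the spirit of joint bilateral filtering. The sketch below works on a single row and is only an illustration of that family of techniques, not Halide's or Apple's actual filter; the function name and both sigma parameters are assumptions.

```python
import math


def edge_aware_upsample(low_res_depth, guide, sigma_space=1.0, sigma_color=0.1):
    """Upsample a 1-D depth row to len(guide), trusting coarse samples more
    when the high-res guide image looks similar at both positions."""
    scale = len(guide) / len(low_res_depth)
    # Center of each coarse sample, expressed in high-res pixel coordinates.
    centers = [(i + 0.5) * scale - 0.5 for i in range(len(low_res_depth))]
    out = []
    for x, g in enumerate(guide):
        num = den = 0.0
        for c, depth in zip(centers, low_res_depth):
            nearest = min(len(guide) - 1, max(0, int(c + 0.5)))
            g_c = guide[nearest]  # guide brightness at the coarse sample
            w_space = math.exp(-(((x - c) / scale) ** 2) / (2 * sigma_space ** 2))
            w_color = math.exp(-((g - g_c) ** 2) / (2 * sigma_color ** 2))
            num += w_space * w_color * depth
            den += w_space * w_color
        out.append(num / den)
    return out


# Guide row with a hard edge between index 4 and 5; only two depth samples.
guide_row = [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
upsampled = edge_aware_upsample([0.2, 0.8], guide_row)
```

Pixel 4 is geometrically closer to the second depth sample, but because it matches the dark side of the guide it takes the first sample's depth, so the depth edge snaps to the color edge. Detail thinner than the coarse grid, like stray hairs, has no depth sample of its own to snap to, which is why this approach runs out of steam.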

Depth Capture Method 2: TrueDepth Sensor

With the iPhone X, Apple introduced the TrueDepth camera. Instead of measuring disparity, it uses an infrared light to project over 30,000 dots.

However, depth data isn’t entirely based off the IR dots. Sit in a pitch-black room, and take a peek at the TrueDepth data:

Clearly the system uses color data as part of its calculations.

TrueDepth Drawbacks

One drawback of TrueDepth is sensitivity to infrared interference. This means bright sunlight affects quality.

Why not include a TrueDepth sensor on the back of the XR? I think there are three simple reasons: cost, range and complexity.

People will pay extra for Face ID, which requires the IR sensor. People will pay extra for a telephoto lens. People aren’t ready to pay a premium for better depth photos.

Add to that the fact that infrared gets much worse at sensing depth over greater distances, plus the ever-growing camera bump people already resent, and you can see why Apple would be hesitant to go with this solution for the rear-facing camera(s).

Depth Capture Method 3: Focus Pixels and PEM

In the iPhone XR keynote, Apple said:

What the team is able to do is combine hardware and software to create a depth segmentation map using the focus pixels and neural net software so that we can create portrait mode photos on the brand-new iPhone XR.

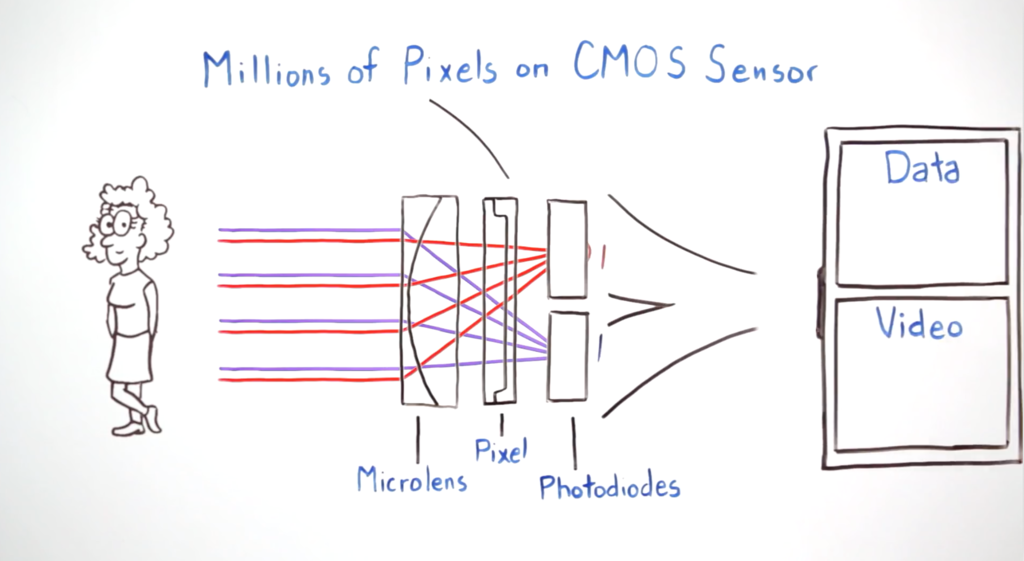

Apple’s marketing department invented the term “Focus Pixels.” The real term is Dual Pixel Auto Focus (DPAF). It’s a common sight on cameras and phones today, and it first came to the iPhone with the iPhone 6.

DPAF was invented to find focus in a scene really fast, which is important when shooting video with moving subjects. However, the way it’s designed opens the door to disparity mapping.

Using DPAF to capture depth is pretty new. The Pixel 2 was the first phone to capture depth on a single camera using DPAF. In fact, Google published a detailed article describing exactly how.

In a DPAF system, each pixel on the sensor is made up of two sub-pixels, each with their own tiny lens. The hardware finds focus similar to a rangefinder camera; when the two sub-pixels are identical, it knows that pixel is in focus. Imagine the disparity diagram we showed earlier, but on an absolutely minuscule scale.

If you captured two separate images, one for each set of sub-pixels, you’d get two images about one millimeter apart. It turns out this is just enough disparity to get very rough depth information.
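A quick back-of-the-envelope calculation shows why a one-millimeter baseline only yields rough depth. It uses the standard pinhole stereo relation, disparity = focal length × baseline / distance. The focal length in pixels and the 10 mm dual-camera baseline below are made-up round numbers for illustration, not measured iPhone specifications.

```python
def disparity_px(focal_px, baseline_mm, depth_mm):
    """Pinhole stereo model: disparity shrinks as the object gets farther away."""
    return focal_px * baseline_mm / depth_mm


FOCAL_PX = 3000          # assumed focal length, in pixels
DUAL_BASELINE_MM = 10    # assumed spacing between two separate cameras
DPAF_BASELINE_MM = 1     # "about one millimeter", per the text above

subject_mm, background_mm = 1000, 3000  # subject at 1 m, background at 3 m

# How many pixels of disparity separate the subject from the background?
dual_gap = (disparity_px(FOCAL_PX, DUAL_BASELINE_MM, subject_mm)
            - disparity_px(FOCAL_PX, DUAL_BASELINE_MM, background_mm))
dpaf_gap = (disparity_px(FOCAL_PX, DPAF_BASELINE_MM, subject_mm)
            - disparity_px(FOCAL_PX, DPAF_BASELINE_MM, background_mm))
print(dual_gap, dpaf_gap)
```

Under these assumptions the dual-camera setup sees a 20-pixel difference between subject and background, while the sub-pixel pair sees only 2 pixels, leaving very little margin before noise swamps the signal.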

As I mentioned, this technology is also used by Google, and the Pixel team had to do a bunch of work to get it in a usable state:

One more detail: because the left-side and right-side views captured by the Pixel 2 camera are so close together, the depth information we get is inaccurate, especially in low light, due to the high noise in the images. To reduce this noise and improve depth accuracy we capture a burst of left-side and right-side images, then align and average them before applying our stereo algorithm.
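The averaging step in that quote is easy to demonstrate. The sketch below simulates a perfectly static scene with Gaussian sensor noise, so it skips the alignment step Google describes; the frame count, noise level, and helper names are assumptions chosen for the example.

```python
import random
import statistics


def noisy_frame(signal, sigma, rng):
    """Simulate one capture: the true signal plus Gaussian sensor noise."""
    return [s + rng.gauss(0.0, sigma) for s in signal]


def average_burst(frames):
    """Average already-aligned frames pixel by pixel."""
    return [sum(pixels) / len(pixels) for pixels in zip(*frames)]


rng = random.Random(0)                  # fixed seed so the run is repeatable
signal = [0.5] * 2000                   # a flat gray patch
single = noisy_frame(signal, 0.1, rng)
burst = [noisy_frame(signal, 0.1, rng) for _ in range(9)]
averaged = average_burst(burst)

noise_single = statistics.pstdev(single)
noise_averaged = statistics.pstdev(averaged)
print(noise_single, noise_averaged)
```

Averaging N frames of independent noise cuts its standard deviation by roughly the square root of N, so a nine-frame burst should come out around three times cleaner than a single frame.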

Disparity maps on the iPhone XR are about 0.12 megapixels: 1/4 the resolution of the dual camera system’s maps. That’s really small, and it’s why the best portraits coming out of iPhone XR owe much to a neural network.

Portrait Effects Matte

This year Apple introduced an important feature that significantly improves the quality of portrait photos, and they called it “Portrait Effects Matte,” or PEM.

We’ve talked about it extensively before, but to summarize, it uses machine learning to create a highly detailed matte that’s perfect for adding background effects. For now, this machine learning model is trained to find only people:

With PEM, Apple is feeding the 2D color image and 3D depth map into a machine learning system, and the software guesses what the high-resolution matte should look like. It figures out which parts of the image are outlines of people, and even takes extra care to preserve individual hairs, eyeglasses, and other parts that often go missing when a portrait blurring effect is applied.
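Once you have a matte, applying a background effect is a simple per-pixel blend: keep the sharp image where the matte says "person" and use the blurred image everywhere else. Here is a minimal one-row sketch, with a box blur standing in for a real lens blur; the function names and the blur radius are assumptions.

```python
def box_blur(row, radius):
    """Average each pixel with its neighbors (a stand-in for a real lens blur)."""
    n = len(row)
    out = []
    for x in range(n):
        lo, hi = max(0, x - radius), min(n, x + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


def composite_with_matte(row, matte, radius=2):
    """Blend sharp and blurred pixels; matte is 1.0 on the person, 0.0 elsewhere."""
    blurred = box_blur(row, radius)
    return [m * sharp + (1.0 - m) * soft
            for sharp, soft, m in zip(row, blurred, matte)]


row = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
matte = [1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]   # left half is "person"
result = composite_with_matte(row, matte)
```

Fractional matte values between 0 and 1 mix the two images, which is what lets wispy details like hair blend softly into the blurred background instead of being cut out with a hard edge.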

Portrait mode photos have always looked pretty good. PEM makes them look great. It’s powerful enough to make the iPhone XR’s very low resolution depth data reliably good.

That’s why the camera app on iPhone XR refuses to take depth photos unless it ‘sees’ a person. As of iOS 12.1, PEM is only trained to recognize people; this can absolutely change in the future, even with a software update.

Without PEM, the depth data is a bit crude. Together with PEM, it creates great photos.

So, does the iPhone XR take better portrait photos?

Yes and no. It seems the iPhone XR has two advantages over the iPhone XS: it can capture wider angle depth photos, and because the wide-angle lens collects more light, the photos will come out better in low light and have less noise.

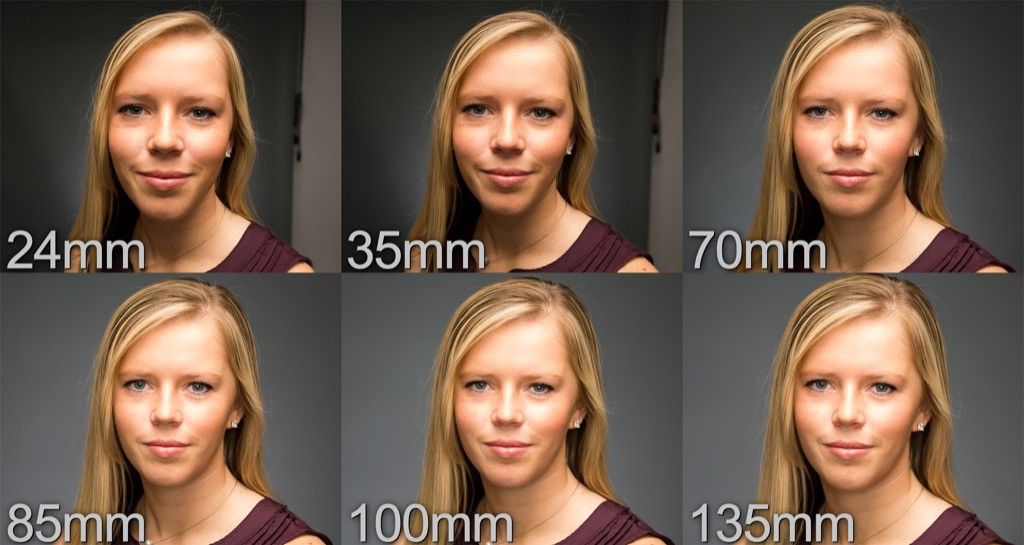

Remember how we said the XR’s Portrait mode is only available on human portraits? When it comes to faces, you never want to photograph a person up close with a wide angle lens, as it distorts the face in an unflattering way:

That means you really have to take portraits on the iPhone XR from a medium shot (waist-up). If you want a close-up headshot, kind of like the iPhone XS, you’ll have to crop, which means you’ll lose a lot of resolution. A wide angle lens isn’t always a plus.

But yes, the lens lets in significantly more light than the telephoto lens on the iPhone XS. That means you’ll see less noise reduction (that’s the non-existent ‘beauty filter’ people thought they saw), and generally get more detail. On top of that, the sensor behind the XR and XS wide-angle lenses is about 30% larger than the one behind the telephoto lens, allowing it to gather even more light and detail.

So yes: sometimes, the iPhone XR will take nicer-looking Portrait photos than any other iPhone, including the XS and XS Max.

But most of the time, the XS will probably produce a better result. The higher fidelity depth map, combined with a focal length that’s better suited for portraiture, means people will just look better, even if the image is sometimes a bit darker. And it can apply Portrait effects on just about anything, not just people.

As to why Apple isn’t letting you use Portrait mode on the iPhone XS with its identical wide-angle camera, we have some ideas.†

Halide 1.11 Brings Portrait Effects to iPhone XR

It’s fun to ‘unlock’ powerful features of a phone that people previously had no access to. Bringing Portrait Effects Matte to iPhone X really blew some people away.

Now we get to do that again: Halide 1.11 will let you take Portrait mode photos of just about anything, not just people.

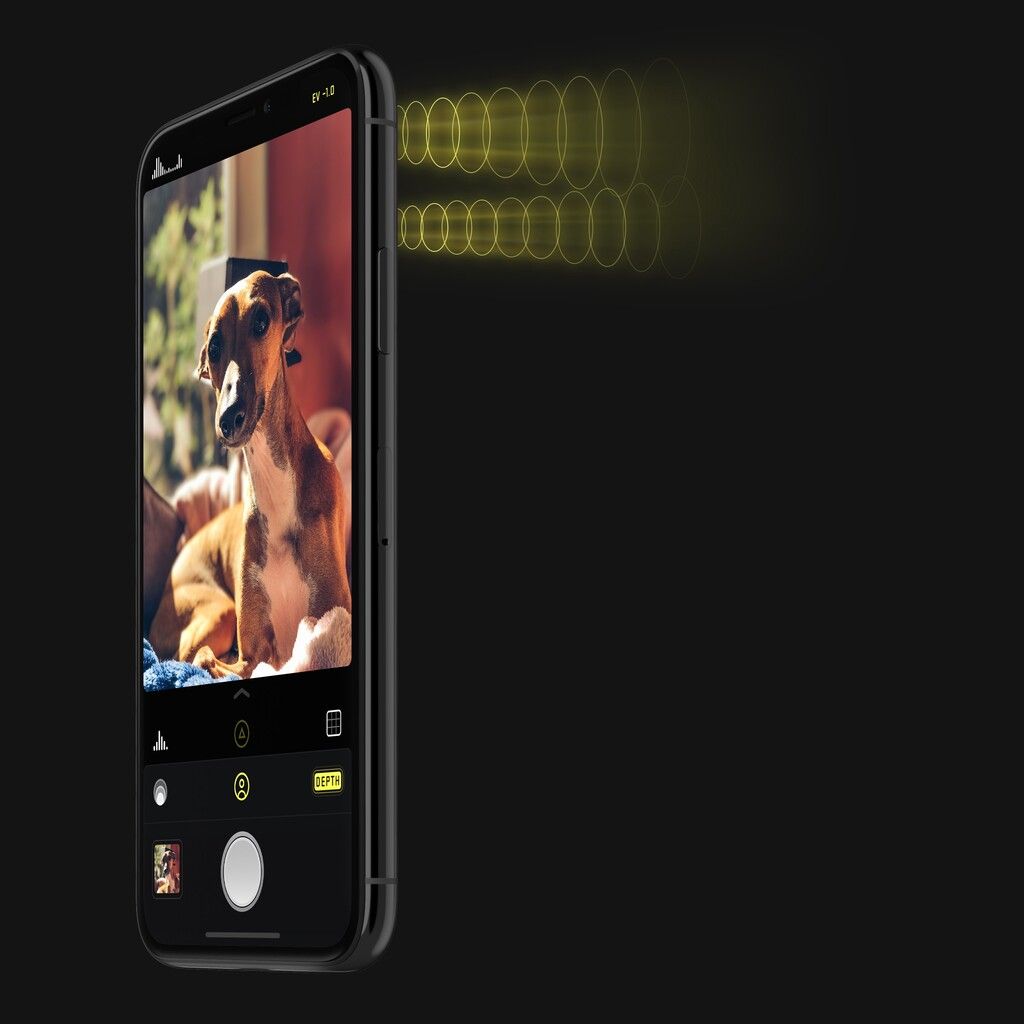

We do this by grabbing the focus pixel disparity map and running the image through our custom blur. When you open Halide on iPhone XR, simply tap ‘Depth’ to enable depth capture. Any photo you take will have a depth map, and if there’s sufficient data to determine a foreground and background, the image will get beautifully rendered bokeh, just like iPhone XS shots.
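What "sufficient data" means is not spelled out here, so the following is a purely hypothetical heuristic rather than Halide's actual logic: treat a disparity map as usable when its values span a wide enough range and both the near and far groups contain a meaningful share of pixels. The function name and both thresholds are invented for illustration.

```python
def has_foreground_and_background(disparities, min_gap=0.2, min_fraction=0.1):
    """Hypothetical check: is there a clear near/far split in the disparity values?"""
    lo, hi = min(disparities), max(disparities)
    if hi - lo < min_gap:
        return False                      # everything sits at about one distance
    midpoint = (lo + hi) / 2
    near = sum(1 for d in disparities if d > midpoint)  # larger disparity = closer
    far = len(disparities) - near
    # Require both groups to be big enough that neither is just stray noise.
    return min(near, far) / len(disparities) >= min_fraction


flat_wall = [0.30] * 100
subject_and_backdrop = [0.1] * 50 + [0.9] * 50
one_noisy_pixel = [0.1] * 99 + [0.9]
```

With these made-up thresholds, a flat wall and a map with a single outlier pixel are both rejected, while a scene split between a near subject and a far backdrop passes.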

You’ll notice that enabling the Depth Capture mode does not let you preview the Portrait blur effect, and it doesn’t automatically detect people. Unfortunately, the iPhone XR does not stream depth data in realtime, so we can’t do a portrait preview. You’ll have to review your portrait effects after having taken the photo, much like on the Google Pixel.

Is it perfect? No. As we mentioned, the depth data is lower quality than what dual-camera iPhones produce. But it’s good enough in many situations, and can be used to get some great shots:

Eager to try it out? Halide 1.11 has been submitted to Apple and will be out once it passes App Store review.

Now the iPhone XR has finally lost that one small flaw: its inability to take a great photo of your beautiful cat, dog, or pet rock. We hope you enjoy it!

†: I suspect that Apple had a bit of a UI design conundrum on its hands: try telling users that Portrait mode suddenly would no longer work if you zoom out a bit unless you point your phone at a face.

That, plus the likely addition of more ‘objects’ to the Portrait Effects Matte machine learning model, means we’ll probably see wide-angle Portrait Effects coming to dual-camera iPhones in the future.